
Load balancing is a technique for distributing incoming network traffic across multiple servers. Nginx is a popular open-source web server and reverse proxy that can also act as a load balancer. In this article, we will discuss how to configure Nginx to balance load across Tomcat servers that have applications deployed on them.

- Start the Tomcat servers and make sure your applications are deployed on them.

- Go to the Nginx server and back up the configuration file located at /etc/nginx/nginx.conf.

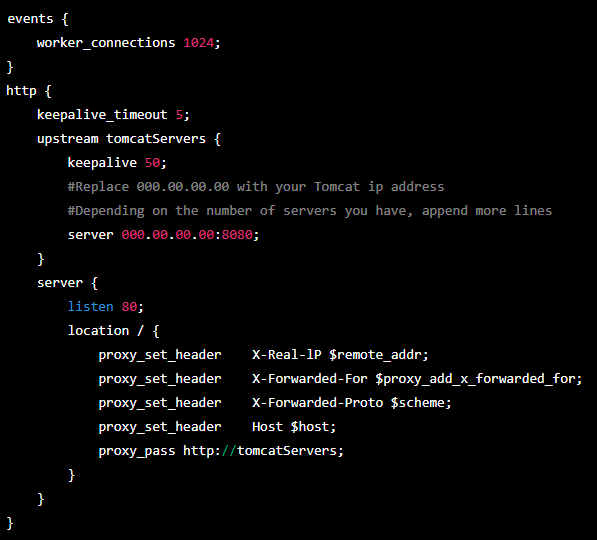

- To configure the Nginx server for load balancing, we need to edit the /etc/nginx/nginx.conf file.

- Add the following content in the nginx.conf file:

- Test the configuration by running

  nginx -t

- Reload the Nginx server by running

  nginx -s reload

- Restart the Nginx service by running

  systemctl restart nginx

- Go to a web browser and enter “NGINXPublicIPAddress:80/contextPath” to test the load balancing.

- If you receive a “502 Bad Gateway” error, SELinux (Security-Enhanced Linux) may be blocking Nginx from connecting to the Tomcat servers. To allow these connections, run the command

  setsebool -P httpd_can_network_connect true

  and then try accessing the context path again.
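
For the configuration step above, here is a minimal sketch of what the load-balancing part of nginx.conf might look like. The upstream name `tomcat_backend`, the IP addresses, and port 8080 are placeholders chosen for illustration, not values from the original setup, so adjust them to match your own Tomcat servers.

```nginx
# Sketch only: these directives go inside the existing http { ... } block
# of /etc/nginx/nginx.conf. Names, addresses, and ports are assumptions.

upstream tomcat_backend {
    # With no balancing method specified, Nginx uses round-robin.
    server 10.0.0.11:8080;   # hypothetical Tomcat server 1
    server 10.0.0.12:8080;   # hypothetical Tomcat server 2
}

server {
    # If the stock file already defines a server listening on port 80,
    # put the location block below in that server instead of adding a second one.
    listen 80;

    location / {
        # Forward each request to one of the servers in the upstream group.
        proxy_pass http://tomcat_backend;

        # Pass the original host and client address on to Tomcat.
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

With a configuration along these lines, repeated requests to the same context path should alternate between the two Tomcat servers.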

You can also make modifications to the nginx.conf file to control the load-balancing behavior of your Nginx server.

- For example, you can assign different weights to different servers so that more load is sent to one server and less to another.

- You can also configure a server as a backup so that requests only go to that server when the other servers are down.

- To do this, update the server lines in the nginx.conf file with the appropriate weight or backup settings; then, restart the Nginx service for the changes to take effect.

- You can also take a server out of rotation by adding ‘down’ to its server line in the nginx.conf file and restarting the service for the change to take effect.
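
The weight, backup, and down settings described above can be sketched in a single upstream block. As before, the upstream name and the server addresses are hypothetical placeholders, and the weight of 3 is an arbitrary value picked for illustration.

```nginx
# Sketch only: placeholder upstream group inside the http { ... } block.
upstream tomcat_backend {
    server 10.0.0.11:8080 weight=3;  # gets about three requests for every one sent to the next server
    server 10.0.0.12:8080;           # default weight is 1
    server 10.0.0.13:8080 backup;    # used only when the primary servers are unavailable
    server 10.0.0.14:8080 down;      # marked as permanently unavailable; receives no requests
}
```

Running nginx -t before restarting is a quick way to catch a typo in any of these parameters.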

To conclude, we have seen that Nginx is a powerful tool for load balancing and can be easily configured to distribute incoming network traffic across multiple servers. This helps to prevent any single server from becoming a bottleneck and helps the system handle a large number of concurrent requests.
